Implications of AI

Artificial Intelligence (AI) is a rapidly evolving technology that has permeated almost every sector of our lives. From healthcare to business, AI has the potential to revolutionize the ways we live and operate. However, along with these advancements, AI has also raised multifaceted ethical, social, and economic challenges that need to be addressed.

This article provides a comprehensive exploration of these challenges, the significance of a robust ethical framework for AI, and the potential societal impacts of AI.

The Ethical Challenges of AI

Artificial intelligence has numerous and intricate ethical implications. They range from issues of bias and discrimination to privacy concerns and accountability.

AI and Bias

Bias in AI systems is one of the most significant ethical concerns. AI systems are trained using data, and if the data contains bias, the AI system’s decisions can also be biased. This can lead to discriminatory decisions that can have far-reaching effects.

The Nature of Bias in AI

Bias in AI originates from the data used to train the system. If the data contains inherent bias, the AI system will likely replicate it in its decisions. This can manifest in various forms, such as racial or gender bias, leading to discriminatory decisions. For instance, an AI system used in recruitment may favor male candidates over female candidates if the training data contains a higher proportion of male employees. Similarly, an AI system used in law enforcement may disproportionately target certain racial or ethnic groups if the training data contains inherent racial bias.
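One common way to surface this kind of bias is to compare how often a system selects members of each group. The sketch below is illustrative only: the records are invented, and the function names `selection_rates` and `disparate_impact_ratio` are hypothetical helpers, not part of any particular library. It computes the selection rate per group and the ratio of the lowest rate to the highest, where 1.0 means parity.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the fraction of positive outcomes per group.

    `records` is an iterable of (group, selected) pairs, where `selected`
    is True when the system recommended the candidate.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening outcomes, invented purely for illustration.
records = (
    [("male", True)] * 60 + [("male", False)] * 40
    + [("female", True)] * 30 + [("female", False)] * 70
)

rates = selection_rates(records)
ratio = disparate_impact_ratio(rates)
print(rates)
print(f"disparate impact ratio: {ratio:.2f}")
```

A ratio well below 1.0, as in this made-up data, would be a signal to investigate the training data and the model more closely. It is a screening metric under simplifying assumptions, not proof of discrimination on its own.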

Case Studies of Bias in AI

Several real-world instances illustrate the pervasive issue of bias in AI.

- Goldman Sachs was investigated for allegedly using an AI algorithm that discriminated against women by granting men larger credit limits than women on the Apple Card. [Reference]

- Optum, a health services and innovation company, was scrutinized by regulators for creating an algorithm that allegedly recommended that doctors and nurses pay more attention to white patients than to sicker black patients. [Reference]

These cases underscore the importance of addressing bias in AI and the potential harm it can cause.

AI and Privacy

AI systems often require large amounts of data to function effectively. This reliance on data raises significant privacy concerns, as AI systems can potentially misuse or mishandle sensitive user data.

The Nature of Privacy Concerns in AI

AI systems, particularly those in the fields of healthcare and finance, often require access to sensitive personal information. For example, an AI system used in healthcare may need access to a patient’s medical history to make accurate predictions or diagnoses. This data can include information about a patient’s physical health, mental health, genetics, and lifestyle. If this data is mishandled or misused, it can lead to serious privacy violations.

Furthermore, many AI systems are designed to continually learn and adapt by collecting and analyzing more data over time. This can lead to a situation where AI systems collect more data than necessary, further exacerbating privacy concerns.
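One mitigation for over-collection is data minimization: passing a system only the fields it actually needs. The following is a minimal sketch of that idea, assuming a hypothetical allow-list (`REQUIRED_FIELDS`) and an invented patient record; real systems would derive the allow-list from the model's documented inputs and applicable regulation.

```python
# Hypothetical allow-list: the only fields this illustrative model needs.
REQUIRED_FIELDS = {"age", "blood_pressure", "cholesterol"}


def minimize(record, allowed=REQUIRED_FIELDS):
    """Return a copy of `record` containing only the allow-listed fields."""
    return {key: value for key, value in record.items() if key in allowed}


# Invented record for illustration; not real patient data.
patient = {
    "name": "Jane Doe",
    "age": 54,
    "blood_pressure": 130,
    "cholesterol": 210,
    "genetic_markers": ["hypothetical-marker"],
}

model_input = minimize(patient)
print(model_input)
```

Filtering at the point of ingestion means that identifying or unnecessary fields, such as the name and genetic markers here, never reach the model or its logs.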

Case Studies of Privacy Concerns in AI

There have been several high-profile cases of privacy concerns related to AI.

- IBM was sued by the city of Los Angeles for allegedly misappropriating data it collected with its ubiquitous weather app. [Reference]

- Google’s acquisition of Fitbit, a company that produces activity trackers, was scrutinized due to concerns about how Google would use the health data collected by Fitbit devices. [Reference]

These cases highlight the need for strong privacy protections when using AI systems.

AI and Accountability

Another significant ethical challenge posed by AI is the question of accountability. If an AI system makes a decision that results in harm, determining who is responsible can be complex. Is it the developers who designed the system, the users who employed the system, or the AI system itself? This question underscores the need for clear guidelines and regulations regarding AI accountability.

The Complexity of Accountability in AI

Accountability in AI is a complex issue due to the autonomous nature of many AI systems. Traditional models of accountability, which typically involve a clear actor making a decision, may not apply in cases where decisions are made by an AI system. The AI system may make decisions based on patterns it has learned from its training data, which may not be immediately understandable or predictable to humans.

Furthermore, the developers of an AI system may not have foreseen the specific scenarios in which their system would be used. The users of an AI system may not fully understand how the system works or the potential risks associated with its use. The AI system itself, while capable of making decisions, does not have consciousness or intent in the way humans do.

Case Studies of Accountability in AI

Several cases highlight the complexity of accountability in AI.

- In 2018, an autonomous vehicle operated by Uber was involved in a fatal crash. The vehicle’s AI system failed to correctly identify a pedestrian crossing the road, leading to the pedestrian’s death. [Reference] In this case, who should be held accountable: Uber, the developers of the AI system, the safety driver who was supposed to oversee the vehicle’s operation, or the AI system itself?

- In 2016, Microsoft launched an AI chatbot named Tay on Twitter. The chatbot was designed to learn from interactions with users and adapt its responses accordingly. However, within 24 hours of its launch, Tay began posting offensive and inappropriate tweets, leading Microsoft to take the chatbot offline. [Reference] In this case, who should be held accountable for the chatbot’s actions: Microsoft, the users who interacted with Tay, or the chatbot itself?

These cases underscore the need for clear guidelines on AI accountability to ensure that those responsible for harm caused by AI systems can be held accountable.

The Social Implications of AI

Alongside the ethical challenges, AI has significant social implications, both positive and negative.

Positive Social Implications of AI

AI has several potential benefits for society. It can augment professionals in various fields, automate tasks, and improve efficiency. For instance, AI can augment healthcare professionals by assisting with diagnosis, reducing the risk of infection for medical staff, and improving patient care.

Negative Social Implications of AI

Despite its benefits, AI also has potential negative impacts. Its application in fields like healthcare places new demands on medical ethics: using AI in diagnosis and treatment decisions raises questions about patient consent, privacy, and the potential for error. More broadly, automation raises the prospect of job displacement.

The Economic Implications of AI

AI is transforming not only the way we live and work, but also the way we produce and consume goods and services. It has the potential to generate significant economic benefits for individuals, businesses, and societies, but also to create new challenges and risks.

According to a report by McKinsey Global Institute, AI could contribute up to $13 trillion to the global economy by 2030, or about 1.2 percent additional GDP growth per year. This estimate is based on the adoption and absorption of five broad categories of AI technologies: computer vision, natural language, virtual assistants, robotic process automation, and advanced machine learning. The report also suggests that AI could widen the gap between high-performing and lagging countries, companies, and workers, as the benefits and costs of AI are likely to be unevenly distributed.
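To get a feel for what 1.2 percent of additional growth per year amounts to, the figure can be compounded over the period. The sketch below is a back-of-the-envelope calculation, not the report's methodology: the 12-year horizon is an assumption made here for illustration, and `cumulative_uplift` is a hypothetical helper name.

```python
def cumulative_uplift(annual_extra_growth, years):
    """Compound a constant extra annual growth rate over `years` years.

    Returns the cumulative increase as a fraction, e.g. 0.10 for 10 percent.
    """
    return (1 + annual_extra_growth) ** years - 1


ANNUAL_EXTRA_GROWTH = 0.012  # 1.2 percent per year, as quoted in the text
YEARS = 12                   # assumption for illustration: a 12-year horizon ending in 2030

uplift = cumulative_uplift(ANNUAL_EXTRA_GROWTH, YEARS)
print(f"cumulative uplift after {YEARS} years: {uplift:.1%}")
```

Under these assumptions the compounded effect is roughly 15 percent of additional output by the end of the period, which shows how a seemingly small annual increment accumulates into a large total.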

Some of the main economic impacts of AI are:

- Productivity improvement: AI can enhance the efficiency and effectiveness of processes and tasks such as data analysis, decision making, customer service, and quality control. It can enable new forms of innovation and creativity, for example generative AI, which produces novel content including images, text, music, and code. It can also augment human capabilities and complement human skills in problem solving, communication, and learning.

- Cost reduction: AI can lower the cost of production and delivery of goods and services, by automating routine and repetitive tasks, optimizing resource allocation, and reducing errors and waste. AI can also increase the availability and affordability of goods and services, by creating new markets and business models, such as platform-based and peer-to-peer economies, and by lowering the barriers to entry and access for consumers and producers.

- Employment and income changes: AI can create new jobs and income opportunities by generating new demand for goods and services and by requiring new skills and roles, such as AI developers, trainers, and explainers. It can also displace existing jobs and income sources by substituting machines for human labor and by increasing market competition and concentration. Finally, it can affect the quality and security of work by changing its nature and conditions, including tasks, skills, hours, and wages.

- Social and environmental impacts: AI can have positive or negative effects on the social and environmental aspects of the economy, depending on how it is designed, used, and regulated. It can improve people’s health, education, and well-being by enabling better diagnosis, treatment, and prevention of disease, by widening access to education and raising its quality, and by supporting the social inclusion and empowerment of marginalized groups. It can also harm them by raising ethical and legal dilemmas around privacy, security, bias, and accountability, by increasing the risk of manipulation, deception, and fraud, and by undermining human dignity and autonomy. On the environmental side, AI can improve sustainability and resilience by reducing carbon emissions and resource consumption, by helping to monitor and mitigate the effects of climate change and natural disasters, and by promoting the conservation and restoration of ecosystems. It can also do damage by increasing demand for energy and materials, by generating new forms of pollution and waste, and by disrupting the natural balance and diversity of life.

As AI becomes more pervasive and powerful, it is essential to ensure that its economic impacts are aligned with the values and goals of society, and that its benefits are shared widely and fairly. This requires a collaborative and proactive approach from all stakeholders, including governments, businesses, civil society, and individuals, to develop and implement effective policies and practices that can foster the responsible and inclusive development and use of AI.

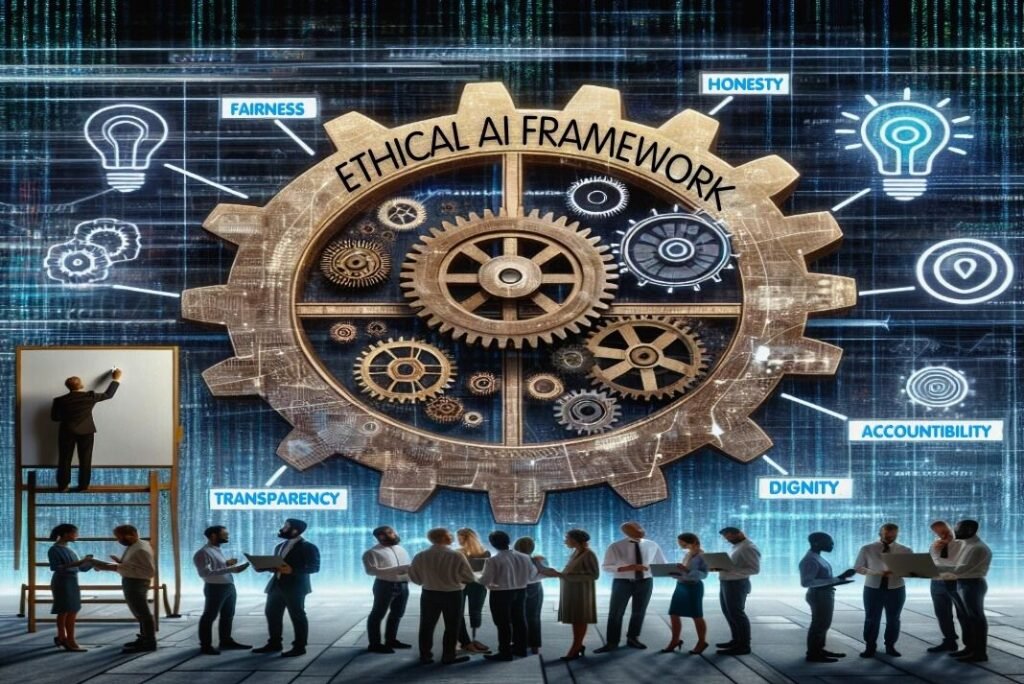

Building an Ethical AI Framework

Given the ethical challenges and the social and economic implications associated with AI, it is crucial for companies to have a clear plan to deal with these issues. To operationalize data and AI ethics, companies should:

- Determine which current infrastructure an AI and data ethics program can use.

- Create a data and AI ethical risk framework that is tailored to their industry.

- Build organizational awareness about the ethical implications of AI.

- Provide employees with formal and informal incentives to assist in identifying ethical risks associated with AI.

- Monitor impacts and engage stakeholders.

This framework can help companies navigate the ethical landscape of AI and ensure that their AI systems are developed and used responsibly.

Conclusion

AI has the potential to reshape society in profound ways. However, the rapid advancement of AI also raises serious ethical, social, and economic concerns. These concerns, ranging from bias and discrimination to privacy and accountability, highlight the need for a robust ethical framework for AI.

As AI continues to evolve and permeate various sectors, it is crucial to address these issues proactively. Only by doing so can we ensure that AI advances, rather than undermines, humanity’s best interests.

FAQs

Here are some frequently asked questions about the ethical, social, and economic implications of AI: